Containers to the rescue!

What are containers, why do we have them, what do they do, and where can we use them?

Introduction

In this first blog of the series Understanding Google Kubernetes Engine, we will quickly get up to speed with containers before we start using Kubernetes to orchestrate them.

One of the most common problems in software engineering is the final packaging of the applications. The problem is often neglected until the last minute because we tend to focus on building and designing the software. But final packaging and deployment are often as difficult and complex as the original development. Luckily, we have many tools available to address this problem, one of which relies on containers.

But in the beginning, there was a server!

Applications are the heart of businesses. If the applications break, companies break. For years, engineers have had the job of providing infrastructure for applications big or small, distributed or localized. In the past, we would run only one application per server. So, every time the business needed a new application or the demand increased, the IT department would do the only thing it could do: buy big, fast servers that cost a lot of money. A seasoned admin would recall what a nightmare it was.

But what if things go wrong? Hardware isn't that reliable, and achieving high availability while managing your own physical servers is tough. We haven't even mentioned what happens to this massive infrastructure during off-peak times. Your servers just sit there gathering dust when the demand recedes.

A waste of company capital and environmental resources!

Virtualization and VMware

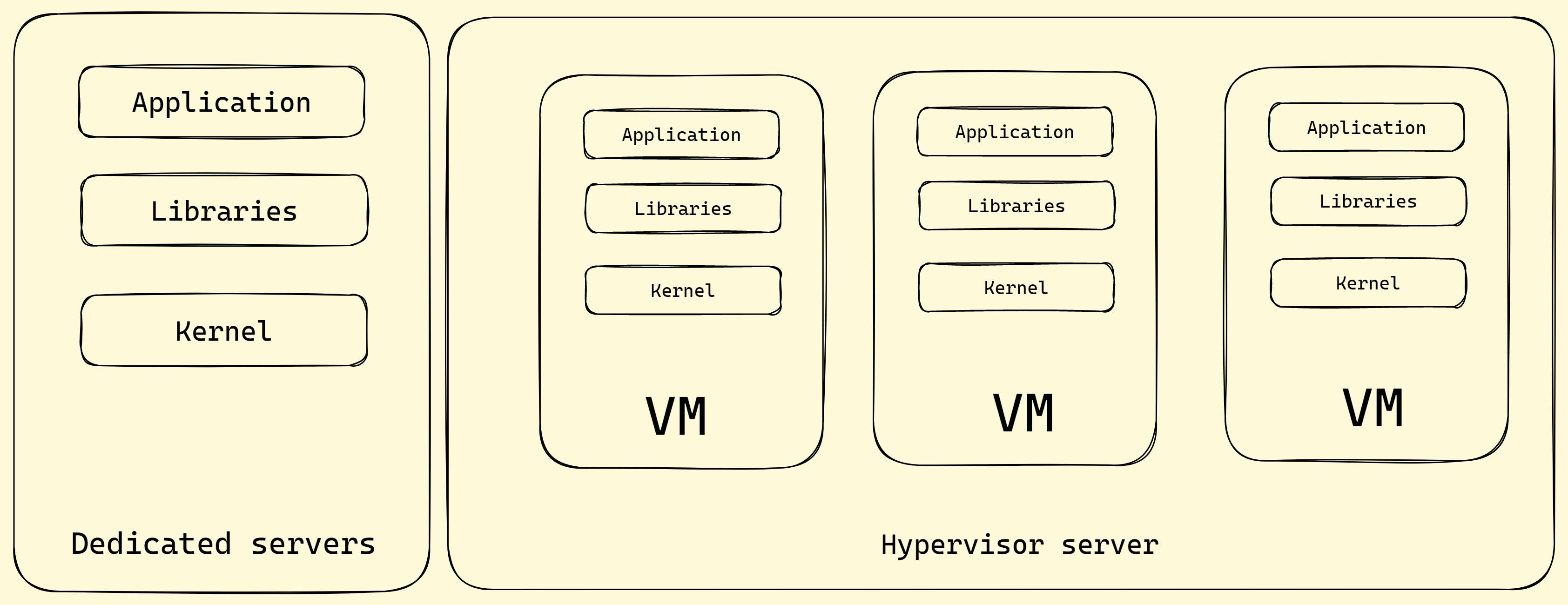

One solution that people came up with was virtualization. Instead of running applications directly on a server, the server runs a hypervisor, which hosts many virtual servers, better known as virtual machines (VMs). Virtualization was made popular thanks to VMware.

Abstracting the hardware away, you could quickly deploy virtual machines.

VMs were isolated and portable.

With better hardware utilization, IT departments no longer needed to procure a brand new server every time the business needed a new application.

You can use a pool of resources to deploy multiple applications rather than tying a single application to a physical server.

Each VM still contains a complete operating system alongside our application code.

Even though we get better hardware utilization, is it really an improvement?

Problems with virtualization

Since each VM contains a single operating system, along with its libraries and dependencies, it is difficult to run more than one application per VM. How do you balance hardware utilization when one application has a higher load than another?

We are back to square one: still running a single application per VM, only now with redundant operating systems everywhere. We would also end up needing complex configuration management systems every time we deploy our application to a new VM. There's got to be a better way, for sure.

Containers, assemble!

In case you haven't guessed, the better way to isolate and run your applications with maximum hardware efficiency and automation is of course containers.

With the container approach, we abstract away the operating system as well as the hardware. You take just the pieces you need to run your application and run them in isolation, using a feature of the Linux kernel that provides multiple isolated userspace instances. This is the concept of containerization, and your isolated application is a container.

Container technologies have been used by Google for a very long time to address the shortcomings of the VM model.

Google has contributed container-related technologies to the Linux kernel, most notably control groups (cgroups). Together with kernel namespaces and union filesystems, these are the building blocks without which we would not have the modern container today.
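On a Linux machine you can peek at two of these building blocks yourself. The following is only a small sketch, assuming a Linux host with the /proc filesystem mounted. It lists the namespaces the current shell belongs to and the control group it has been placed in. The variable name NS_DIR is just for illustration.

```shell
#!/bin/sh
# Sketch only: assumes a Linux host with /proc mounted.
NS_DIR="/proc/self/ns"

if [ -d "$NS_DIR" ]; then
    # Each symlink is one namespace this process lives in (pid, net, mnt, uts, ipc, ...).
    ls -l "$NS_DIR"
else
    echo "No $NS_DIR found; namespaces are a Linux kernel feature."
fi

if [ -r /proc/self/cgroup ]; then
    # Shows the control group(s) that account for and limit this process.
    cat /proc/self/cgroup
fi
```

Run it once on the host and once inside a container, and the namespace IDs in the output will typically differ. That difference is the isolation at work.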

Benefits of using containers

Write once, run anywhere

Easily deploy to dev, test, and prod

Deploy efficiently to GKE, VMs, or bare metal

Promote microservices

So how do you even make a container? Where does Docker fit into all of this?

Despite all of this, containers remained complex and out of reach for most organizations until Docker democratized them and made them accessible to the masses. Docker created a fantastic toolset around the Linux containerization features, making it developer-friendly to create a container and manage its deployment, and built tools for handling container images and registries.

Docker, Inc. made containers simple!

Fire up your first container

Containers start life as an image, much like any other packaged software. The difference is that a container image uses a layered, read-only filesystem. Whenever you run a container, a thin read-write layer is added on top of the image. Because the image layers themselves never change, you can trust that every container started from the same image behaves the same way.

You can create a container image using a plain text configuration file named Dockerfile. An example Dockerfile does the following:

Inherit from an Ubuntu Image

Copy everything in local directory to /app

Run make to compile our application

When the container runs, execute our Python app.
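The four steps above can be sketched as a Dockerfile. This is only an illustration under a few assumptions: the directory name my-app-demo, the ubuntu:22.04 base tag, and the file name app.py are all hypothetical, and the install step is an addition, because a stock Ubuntu image ships neither make nor Python. The snippet writes the file with a shell heredoc so you can paste it straight into a terminal.

```shell
#!/bin/sh
# Sketch only: the directory name, base image tag, and app.py are assumptions.
DEMO_DIR="my-app-demo"
mkdir -p "$DEMO_DIR"

cat > "$DEMO_DIR/Dockerfile" <<'EOF'
# Inherit from an Ubuntu image
FROM ubuntu:22.04

# Assumption: stock Ubuntu ships neither make nor Python, so install them first
RUN apt-get update && apt-get install -y make python3

# Copy everything in the local directory to /app
COPY . /app

# Run make to compile our application (expects a Makefile in /app)
RUN make -C /app

# When the container runs, execute our Python app
CMD ["python3", "/app/app.py"]
EOF

cat "$DEMO_DIR/Dockerfile"
```

Put your own Makefile and app.py next to the generated Dockerfile and run the build from inside that directory.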

To build the container image, execute:

docker build -t my-app .

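The command above builds the container image. The "-t" flag sets the name (and, optionally, a tag) you want to give the image, and the trailing "." tells Docker to use the current directory as the build context.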

Above is a visualization of what it looks like once the image is built. The layers are listed in reverse order, because Docker stacks each new layer on top of the ones it has already built. Each layer gets its own unique ID. In our example Dockerfile, the first and largest layer is the Ubuntu base image, then comes our application, and then the changes made by the make command.

Containers promote smaller, shared images. If a change has to be made to this container, only the layer that changed, and the layers built on top of it, have to be updated.
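If you want to see the layers of your own image, docker history lists them. The following is a small sketch, assuming Docker is installed and the image was built under the name my-app as above. The guard around the command is only there so the snippet fails gracefully when the image does not exist yet.

```shell
#!/bin/sh
# Sketch only: assumes Docker is installed and the image was built as "my-app".
IMAGE="my-app"

if command -v docker >/dev/null 2>&1 && docker image inspect "$IMAGE" >/dev/null 2>&1; then
    # Newest layer first; each row shows the instruction that created it and its size.
    docker history "$IMAGE"
else
    echo "Image '$IMAGE' not available; build it first with: docker build -t $IMAGE ."
fi
```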

To run a container, execute:

docker run -d my-app

The "-d" flag just daemonizes the process, that is, it runs the container in detached mode in the background. There are a lot of other parameters you can pass, such as port mappings, but this is all you need to run your first container.

You can learn more about how to use Docker at https://labs.play-with-docker.com/
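As a rough illustration of those extra parameters, here is a sketch that names the container, maps a port, and then inspects and cleans it up. It assumes Docker is installed and the my-app image exists. The container name my-app-1 and the port numbers are hypothetical, since the example app may not listen on any port at all.

```shell
#!/bin/sh
# Sketch only: assumes Docker is installed, the image "my-app" exists,
# and (hypothetically) the app listens on port 80 inside the container.
IMAGE="my-app"
NAME="my-app-1"

if command -v docker >/dev/null 2>&1 && docker image inspect "$IMAGE" >/dev/null 2>&1; then
    # -d: run in the background; -p: map host port 8080 to container port 80.
    docker run -d --name "$NAME" -p 8080:80 "$IMAGE"
    docker ps --filter "name=$NAME"   # confirm it is running
    docker logs "$NAME"               # see what the app printed
    docker stop "$NAME"               # stop the container
    docker rm "$NAME"                 # remove the stopped container
else
    echo "Image '$IMAGE' not available; build it first with: docker build -t $IMAGE ."
fi
```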

Lab

In the next part of this series, we will run a lab on GCP, where we will:

Create an application in Docker

Run and interact with local containers

Tag the Docker image and push it to a container registry

So, if you do not have a GCP account yet, go make one and start your free trial. If you need help creating a free trial GCP account, you can refer to https://youtu.be/P2ADJdk5mYo

Containers in a nutshell!

Containers are just another way of packaging our applications, but they are an efficient, developer-friendly way of doing it. They remove the operational overhead of managing the OS and dependencies from the development lifecycle. Containers also guarantee consistency: you write once and you can run it everywhere.

Containers are a poor fit for applications that have to write to a local disk and for applications that require manual intervention to install.

Thanks for reading this blog. If you found it valuable, give it a round of applause 👏

Resources:

If you want to deep dive into containers - https://iximiuz.com/en/posts/container-learning-path/

Docker Deep Dive book by Nigel Poulton - https://www.amazon.in/Docker-Deep-Dive-Nigel-Poulton-ebook/dp/B01LXWQUFF

Stay tuned for the rest of the series...