Using Azure Machine Learning Service for E2E Machine Learning.

This blog post is part of the MSP Developer Stories initiative by the Microsoft Student Partners (India) Program (studentpartners.microsoft.com).

Note: For a detailed code tutorial, visit the documentation.

1. Introduction.

We will learn about the capabilities of the Azure Machine Learning service that help data scientists and AI developers with end-to-end (E2E) machine learning.

When developing AI apps, we can often use Cognitive Services, but they won’t work in every possible scenario. In such cases, we have to train custom machine learning models on our own data. The Azure Machine Learning (AML) service has an SDK, a CLI, and APIs that can help create these custom models.

We are going to learn how to use the Azure Machine Learning SDK for Python to carry out E2E machine learning.

2. Using the Azure Python SDK for E2E Machine Learning.

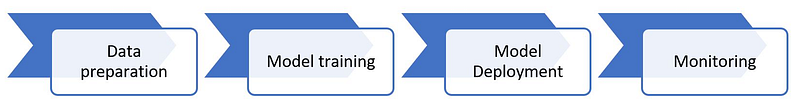

As shown in the above diagram, E2E machine learning starts with data preparation, which includes cleaning the data and featurization. The next step is creating and training a machine learning model, followed by deploying the model (as a web service or as an app). The final step is monitoring, which includes analyzing how the model performs.

The SDK can be installed with pip:

pip install --upgrade azureml-sdk

We can scale the compute for training by using a cluster of CPUs or GPUs. We can also easily track the run history of all experiments with the SDK. This run history is stored in a shareable workspace, which allows teams to share resources and experiment results. Another advantage of the SDK is that it allows us to find the best model from multiple runs of our experiment, based on a metric that we specify, such as the highest accuracy or the lowest error rate.

Once we have a machine learning model that meets our needs, we can put it into operation using the Azure ML service. We can create a web service from the model and deploy it to Azure-managed compute or to an IoT device. The SDK can be used from any IDE, so you can deploy the model from VS Code or PyCharm if you do not use Jupyter Notebooks or Databricks. For development and testing scenarios, we can deploy the web service to Azure Container Instances (ACI). To deploy the model in production, we can use Azure Kubernetes Service (AKS). After the web service is deployed to AKS, we can enable monitoring and see how the web service is performing.

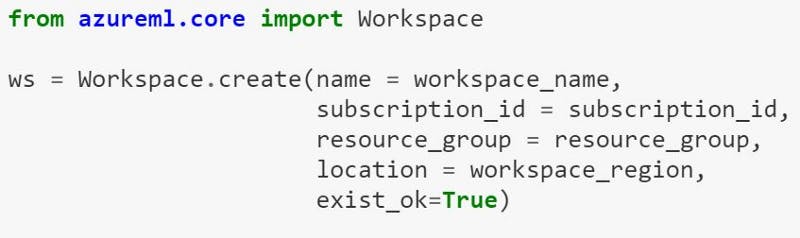

The following sample code shows how to use the SDK for E2E machine learning. Start by creating a workspace for your machine learning project. This will be created in your Azure subscription and can be shared with different users in your organization. This workspace logically groups the computes, experiments, datastores, models, images, and deployments that we will need for E2E machine learning:

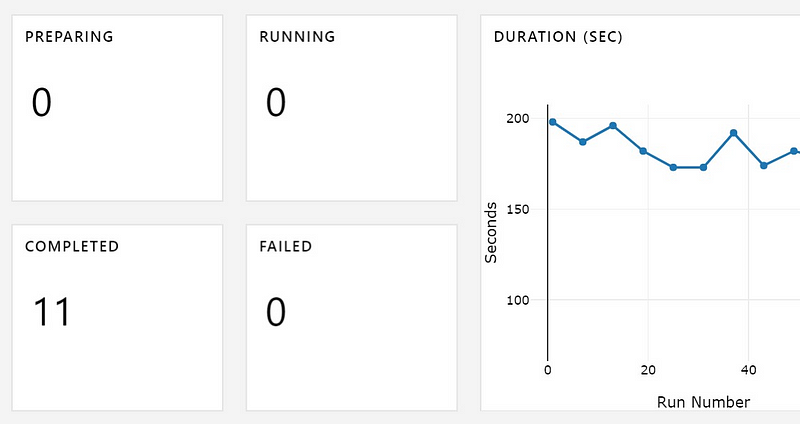
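In code, creating the workspace might look like the sketch below. It uses `Workspace.create` and `write_config` from the `azureml-core` package (SDK v1). The environment variable names (`AML_SUBSCRIPTION_ID` and so on) and the two helper functions are hypothetical, chosen only for illustration.

```python
import os


def workspace_settings(env=os.environ):
    """Collect workspace settings from environment variables.

    The variable names are hypothetical; use whatever fits your setup.
    """
    required = [
        "AML_SUBSCRIPTION_ID",
        "AML_RESOURCE_GROUP",
        "AML_WORKSPACE_NAME",
        "AML_LOCATION",
    ]
    missing = [key for key in required if not env.get(key)]
    if missing:
        raise ValueError("Missing settings: " + ", ".join(missing))
    return {
        "subscription_id": env["AML_SUBSCRIPTION_ID"],
        "resource_group": env["AML_RESOURCE_GROUP"],
        "name": env["AML_WORKSPACE_NAME"],
        "location": env["AML_LOCATION"],
    }


def create_workspace(settings):
    """Create the workspace (or reuse it if it already exists)."""
    # Imported lazily so workspace_settings() works without the SDK installed.
    from azureml.core import Workspace

    ws = Workspace.create(
        name=settings["name"],
        subscription_id=settings["subscription_id"],
        resource_group=settings["resource_group"],
        location=settings["location"],
        create_resource_group=True,
        exist_ok=True,
    )
    # Save a config.json so that Workspace.from_config() can reload it later.
    ws.write_config()
    return ws
```

Calling `create_workspace(workspace_settings())` requires an Azure subscription and will prompt for authentication; teammates can then load the same workspace with `Workspace.from_config()`.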

Once we have created a workspace, we can create a training compute that scales automatically based on our training requirements. This compute can use either CPUs or GPUs, and we can use whichever framework we like. We can perform classical machine learning with scikit-learn, for example, or deep learning with TensorFlow. Any framework or library that can be installed using pip can be used with the SDK. We can submit experiment runs to this compute and see the results both in our own environment and in the Azure portal. We can also log metrics as part of our training script. Here are some sample screenshots of how experiment runs and their corresponding metrics may appear in the Azure portal. We can also access these metrics in code with the SDK.

The following screenshot shows the number of experiments and how long each experiment took to run:
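The steps above can be sketched roughly as follows, using `AmlCompute`, `ScriptRunConfig`, and `Experiment` from SDK v1. The cluster name, VM size, script name `train.py`, and the helper function names are assumptions made for illustration; a real run would usually also pass an `Environment` that lists the frameworks the script needs.

```python
def get_or_create_cluster(ws, name="cpu-cluster", vm_size="STANDARD_D2_V2", max_nodes=4):
    """Return an existing compute cluster, or provision an autoscaling one."""
    # Imported lazily so the rest of this sketch loads without azureml-sdk.
    from azureml.core.compute import AmlCompute, ComputeTarget
    from azureml.core.compute_target import ComputeTargetException

    try:
        return ComputeTarget(workspace=ws, name=name)
    except ComputeTargetException:
        # min_nodes=0 lets the cluster scale down to zero when idle.
        config = AmlCompute.provisioning_configuration(
            vm_size=vm_size, min_nodes=0, max_nodes=max_nodes
        )
        target = ComputeTarget.create(ws, name, config)
        target.wait_for_completion(show_output=True)
        return target


def submit_training(ws, target, experiment_name, script="train.py", source_directory="."):
    """Submit a training script to the cluster and wait for it to finish."""
    from azureml.core import Experiment, ScriptRunConfig

    config = ScriptRunConfig(
        source_directory=source_directory,
        script=script,
        compute_target=target,
    )
    run = Experiment(workspace=ws, name=experiment_name).submit(config)
    run.wait_for_completion(show_output=True)
    return run


def log_metrics(run, metrics):
    """Log each metric on a run.

    Inside the training script, the run object comes from Run.get_context().
    """
    for name, value in metrics.items():
        run.log(name, value)
```

Inside `train.py`, a call such as `log_metrics(Run.get_context(), {"accuracy": acc})` records the metric against the run so that it shows up alongside the run history.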

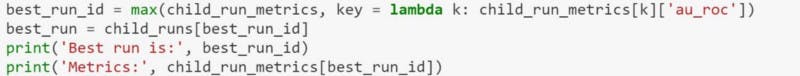

As mentioned, we can find the best run based on a particular metric of interest. An example of a code snippet used to find the best run is as follows:
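A minimal sketch of that selection is shown here. It assumes each run logged the metric once as a single number; `collect_metrics` and `best_run` are hypothetical helper names, while `Experiment.get_runs()`, `Run.id`, and `Run.get_metrics()` come from SDK v1.

```python
def collect_metrics(experiment):
    """Map each run ID in an experiment to the metrics that run logged."""
    # experiment.get_runs() yields Run objects; get_metrics() returns a dict.
    return {run.id: run.get_metrics() for run in experiment.get_runs()}


def best_run(metrics_by_run, metric, higher_is_better=True):
    """Return the ID of the run with the best value for the given metric."""
    # Skip runs that never logged this metric.
    candidates = {
        run_id: metrics[metric]
        for run_id, metrics in metrics_by_run.items()
        if metric in metrics
    }
    if not candidates:
        raise ValueError("No run logged the metric: " + metric)
    pick = max if higher_is_better else min
    return pick(candidates, key=candidates.get)
```

For example, `best_run(collect_metrics(experiment), "accuracy")` picks the most accurate run, and passing `higher_is_better=False` picks the run with the lowest value for an error metric.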

Once we have found the best run, we can deploy the model as a web service, either to ACI or AKS. To do this, we need to provide a scoring script and an environment configuration, in addition to the model that we want to deploy. Here is an example of code that can be used to deploy models:

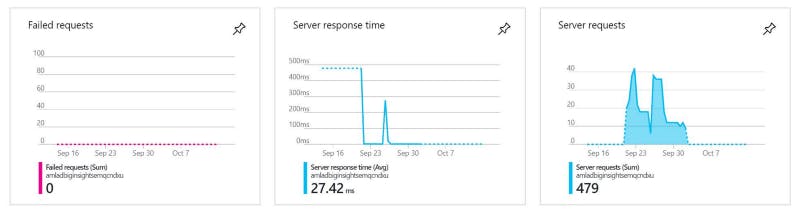
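A deployment to ACI could be sketched as follows with SDK v1. The file names `score.py` and `environment.yml`, the environment name, the resource sizes, and the `{"data": ...}` request shape are assumptions for illustration; the actual request format is whatever your scoring script expects.

```python
import json


def deploy_to_aci(ws, model_name, service_name,
                  entry_script="score.py", conda_file="environment.yml"):
    """Deploy a registered model as a web service on Azure Container Instances."""
    # Imported lazily so build_request() works without azureml-sdk installed.
    from azureml.core import Environment
    from azureml.core.model import InferenceConfig, Model
    from azureml.core.webservice import AciWebservice

    # The environment configuration: packages the scoring script needs.
    env = Environment.from_conda_specification(
        name="inference-env", file_path=conda_file
    )
    inference_config = InferenceConfig(entry_script=entry_script, environment=env)
    deployment_config = AciWebservice.deploy_configuration(cpu_cores=1, memory_gb=1)

    model = Model(ws, name=model_name)  # latest registered version of the model
    service = Model.deploy(
        ws, service_name, [model], inference_config, deployment_config
    )
    service.wait_for_deployment(show_output=True)
    return service


def build_request(rows):
    """Serialize input rows in the (assumed) shape the scoring script reads."""
    return json.dumps({"data": rows})
```

Once the service is running, `service.run(input_data=build_request([[1.0, 2.0]]))` sends a test request to it. For production, the same `Model.deploy` call can target AKS by using an `AksWebservice` deployment configuration and passing the cluster as `deployment_target`.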

When a model is deployed in production to AKS and monitoring is enabled, we can view insights on how our web service is performing. We can also add custom monitoring for our model. The following screenshot shows how the web service has performed over a few days:

You can get more details about the Azure Machine Learning service at the following website: https://docs.microsoft.com/en-us/azure/machine-learning/service/overview-what-is-azure-ml.

You can also get sample notebooks to get started with the Azure Machine Learning SDK at the following website: https://github.com/Azure/MachineLearningNotebooks.

3. Conclusion.

We have learned about the new capabilities of the Azure Machine Learning service, which makes it easy to perform E2E machine learning. We have also learned how professional data scientists and DevOps engineers can benefit from the experimentation and model management capabilities of the Azure Machine Learning SDK.